I started this work by assuming the same thing most low-level GPGPU programmers assume the first time they wire Vulkan for compute: if I can get a device and a queue, I’m basically home. That assumption is wrong! Vulkan is a machine that politely refuses to guess, and compute pipelines will perform exactly what you described, including every mistake you compile into SPIR-V. This post is the first installment of what will end up being my journey through learning Vulkan, and using it to develop compute shaders to perform LLM inference.

For now, I’ll focus on what I managed to get working after much effort: GLU activations – specifically, GELU (tanh variant). All the usual boilerplate is there, but the interesting bits turned out to be shader-side semantics and how SPIR-V interacts with half-precision storage and arithmetic. That’s also where most of the time was spent, unfortunately. They do say how 80% of Vulkan programming is setting up the pipeline.

Getting the minimal runtime to lift its weight

The first concrete step was creating a minimal Vulkan runtime. I made a point of keeping it boring. Create a VkInstance, pick a VkPhysicalDevice, enable what needs enabling, construct a single VkDevice, grab a compute-capable queue family, and carve out a command pool, a primary command buffer, and a fence. I knew descriptors and pipelines would probably add complexity soon enough, so the first milestone was issuing a single dispatch.

The crucial fork was enabling 16-bit storage features at device creation time. The shader sets I borrowed from llama.cpp’s Vulkan backend (which ended up needing a lot of changes, as you will learn later…) use float16_t in SSBOs, and you don’t want to find out after wiring that your device disagrees with you about buffer access. I threaded this through device creation by querying VkPhysicalDevice16BitStorageFeatures and plumbed it as pNext into VkDeviceCreateInfo. The idea was to get half storage working while controlling where half arithmetic is used later, since storage and arithmetic are not the same conversation in Vulkan.

The other design choice was SPIR-V ingestion. I didn’t embed blobs in the binary. The runtime takes a directory and lazy-loads .spv binary files by name the first time a pipeline is requested. That let me iterate on GLSL code and recompile in milliseconds without relinking the extension or rebuilding the world. Later this simplifies correctness debugging too: you don’t have to guess whether your shader changes is in the binary; the runtime reads exactly what you built.

Then came descriptors, pipeline layouts, shader modules, compute pipelines, and descriptor pools/sets. I limited the descriptor set to a single layout and one set per pipeline. No need to overengineer. Three storage buffers for GLU ops was all I needed: A, B, and D.

Push constants, workgroup math, and the first footgun

As soon as I began porting the GLU shaders, I hit my first conceptual wall. The shaders were structured as a head and a main, with glu_head.comp declaring a push constant block and SSBO bindings, and glu_main.comp doing the work with an order-of-operations that looked reasonable until you realise how completely the indexing math depends on correct push constant values.

The push constant block looks like this:

layout (push_constant) uniform parameter

{

uint N;

uint ne00;

uint ne20;

uint mode;

float alpha;

float limit;

} p;

The interpretation is straightforward in English and absolutely unforgiving in practice. N is the total number of output elements, ne00 is twice the hidden size, and ne20 is the hidden size. The head/main split uses gl_GlobalInvocationID to index a 1D logical grid of size N, calculates row and col using a division and remainder module ne20, and from there computes indices into the packed input and compact output. That means any mistake in N, ne00, or ne20, even an innocent different notion of “row-major”, yields an algorithm that faithfully reads and writes the wrong values. I did exactly that at first. I used the token count for N instead of the product num_tokens * d. The shader didn’t crash, of course. It simply slid its window across the wrong rows and columns. This is the sort of error that’s hard to see until you compare against a reference and realize how much your output has been permuted into nonsense.

Fixing it was rather mundane but just cathartic. I forced a mental model: the logical 1D grid you dispatch is not “number of tokens”; it’s “number of output elements”, which is tokens times width. The compute grid is one-dimensional for a reason! The shader’s layout encodes the 2D shape in the push constants. If the mathematics is wrong, you’ve instructed the exact algorithm to operate on the exact wrong region.

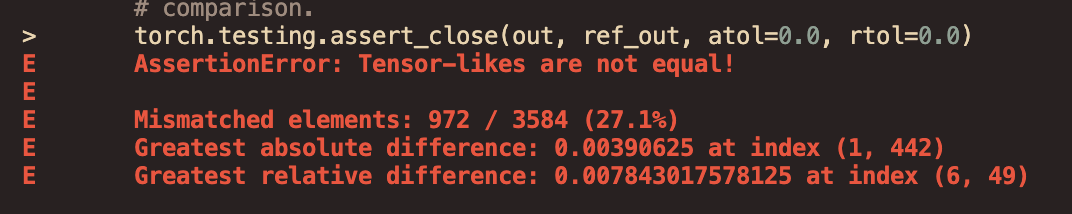

Once I corrected N, ne00, ne20, and computed groups = ceil(N/512) to match the local size, the output stabilized into something recognizable. Not correct yet, mind you, but recognizable. If you want reference numbers, the initial mismatch against the baseline – torch.nn.Functional.gelu(approximate="tanh") – was 99.9% (unfortunately I didn’t take a screenshot of the test at this moment), and now it was down to 27.1%:

The GELU test wouldn’t die

On paper, GeGLU with the tanh approximation is simple. Compute

$$ \text{gelu tanh}(a) = 0.5 , a \left( 1 + \tanh \Big( \sqrt{\frac{2}{\pi}} , \big(a + 0.044715, a^3\big) \Big) \right) $$

then multiply with the other gate. In practice, PyTorch’s fp16 path taught me a different lesson. The formula is the starting point, not the end. The location and direction of dtype conversions matters just as much as the equation itself, and in fp16, it matters a lot.

The shader initially computed the entire GELU in float, multiplied b in float, and wrote out as either fp16 or fp32. The mismatch against PyTorch wasn’t catastrophic. It was insultingly tiny. Max absolute difference of 0.00390625, with almost a third of elements disagreeing by a half-ULP or a single quantization step. Tiny errors everywhere meant the strict-equality tests still failed, because tiny but ubiquitous is exactly what strict equality sees and statistical metrics ignore.

There were two (2) independent problems lurking in this region. The first was formula fidelity. At one point I wrote the intermedite as

const float val = SQRT_2_OVER_PI * a * (1.0f + GELU_COEF_A * a * a);

which is not the canonical tanh approximation. The correct one is

const float val = SQRT_2_OVER_PI * (a + GELU_COEF_A * a * a * a)

A very stupid mistake, one that probably wouldn’t have been made with AI-assisted programming. Right?

Fixing that made some sense of the error distribution, but I still saw mass disagreements in fp16. That led me to the second problem.

Gelu is nonlinear, but the product is where half precision commits to a lattice. If you multiply in float, you’re postponing the irreversibility of quantization until after the most sensitive part, and that doesn’t mirror how PyTorch’s half pipeline tends to behave. The half precision math in practice quantizes at precise boundaries and returns to half for storage and often for the multiply. This is not a compiler’s job; this is the programmer’s job. It must be made explicit, it seems.

The shader version that made strict equality pass looks counterintuitive at first glance, but it’s of course faithful to how the reference behaves. I kept the tanh approximation in float for stability, then cast the nonlinearity to D_TYPE (the output precision), cast the multiplier to D_TYPE as well, and performed the product in D_TYPE. If the output is half, the final multiply becomes a half multiply. If it is float, the product remains float. The choice is explicit and controlled at compile time.

const float val = SQRT_2_OVER_PI * (a + GELU_COEF_A * a * a * a);

const float gelu = 0.5f * a * (1.0f + tanh(val));

// match PyTorch half behavior by multiplying in D_TYPE, not float

D_TYPE gy = D_TYPE(gelu);

D_TYPE by = D_TYPE(b);

D_TYPE py = gy * by;

// return as float for SSBO write if necessary

return float(py);

This exact code annihilated the mismatches. No change to pipeline math, trickery in dispatch, or post-processing. Just a deliberate choice of where the rounding happens.

I tried other variants first. I tried multiplying in float and casting afterwards. I tried forcing the whole GELU path into half. Both were wrong. The first matched less than I needed, the second made the function itself too brittle. The slit version was the only one that mirrored the PyTorch reference numerics across all shapes we care about.

Half storage and the “law of optional arithmetics”

Storage and arithmetic are separate axes in Vulkan. You can expose 16-bit support without opting into half arithmetic. The llama.cpp shaders rely on float16_t SSBO storage and controlled rounding modes (RTE) in SPIR-V. I kept the arithmetic side behind a macro switch so I could explicitly choose where the shader uses half arithmetic and where I leave everything in float until the last moment.

In glu_head.comp I had to enable the half storage extension unconditionally and half arithmetic types conditionally:

#extension GL_EXT_shader_16bit_storage : require

#ifdef ENABLE_F16_ARITH

#extension GL_EXT_shader_explicit_arithmetic_types_float16 : require

#endif

This is essentially how I managed precision without littering the main code with complicated preprocessor diagrams. I refrained from using psuedo-FLOAT_TYPE type in the head/main path because it seemed elegant and turned out to be dysfunctional. The head/main macro is for getting the right indices cheaply, and precision is a per-operator problem.

On the compiler side, the invocations that actually work for half use --target-env=vulkan1.2 and define an RTE shader mode for the f16 variants. Some targets simply do not accept the capabilities this code wants under a Vulkan 1.0 target. The fix was indeed deliberate targeting and not hoping the optimizer is in a good mood today.

The other hard mistakes I made so you don’t have to

I got the descriptor bindings wrong, once. Everything was off by one.

I tried to make the head/main pair generic with a FLOAT_TYPE macro, which exploded in a very GLSL way. Knowing where generality belongs matters; the head/main lane is about indexing and data motion. The operator’s file is where you control dtype behviours. DTYPE casting lives with the op that cares about it.

I got the workgroup count wrong in a couple of places too. I had convinced myself that the shader’s indexing logic would just continue off into the ether and bail out because of the if ( i >= p.N) return n; guard. It does bail out. It also makes you pay in wasted cycles. The right calculation is obvious in hindsight; with a local size X of 512, you dispatch ceil(N/512) workgroups. There’s no reason to do anything else.

At some point after hours of raging at my monitor screen, I thought of hiding precision differences behind a C++ fallback for the fp16 GELU tanh case. It made the tests pass and was fundamentally the wrong thing to do. The whole point of this exercise is to make Vulkan correct, not to use Vulkan when it’s convenient and pretend otherwise when it isn’t. Of course, the C++ implementation had to go.

The Vulkan runtime details that actually mattered

Most of the runtime was gardening. Create a pipeline layout with a push constant range sized to the exact struct in the shader. Create a descriptor set layout with three bindings, all storage buffers, all visible to the compute stage. Create a shader module from the SPIR-V blob. Create a compute pipeline with that module and layout. Allocate a descriptor pool with enough storage buffer descriptors to write three of them. Allocate one descriptor set. Update it with VkWriteDescriptorSet. That’s the whole show.

The choice I made for buffers was to stage through host-visible memory and accept the cost. Vulkan feels strict about unapologetic boundaries, and staging gives you power when debugging. You can look at the bytes. You can copy data in and out of your CPU tensors. You can verify in a Python REPL that what you think you wrote is actually what you wrote. Once the logic is settled, push to zero-copy and imported memory. But build the machine so that correctness is observable first.

Why separate pipeline creation and binding layout concerns

I planned for the set of GLU-like ops to share the same descriptor layout and push constant struct shape. That way the runtime can build one per shader name and cache the whole set. The GPU doesn’t care whether your human brain thinks a STBN pipeline is closely related to a GLU pipeline. Vulkan only cares about layouts and SPIR-V. By keeping the layouts consistent, I can change the shader module and reuse everything else: pipeline layout, descriptor pool/set, and the command buffer sequence.

This matters when you’re comparing variants of an operator that differ only in their nonlinearity. If you keep the binding space constant, the only moving part is the shader body. That isolates the math problem in the shader from the state problem in the driver.

Where this leaves the rest of the kernels

Since I’m writing all this Vulkan code to provide an alternative, universal, vendor-agnostic backend platform to Aphrodite Engine, I need to carefully replace all the (relevant) CUDA kernels to Vulkan equivalents. GLU was of course just the beginning, a mere 1% of the way perhaps. Now that GLU is behaving, I think next on the list is going to be layernorm and positional encoding. RMSNorm isn’t complicated. It needs a careful choice of accumulation precision and a faithful application of scale and epsilon in the same dtype sequence as the reference. The RoPE family has a few head layouts and constant generation details that matter, but the same recipe applies. Get your binding layout correct. Get your push constants precisely aligned with the shader’s expectations. Control dtype conversions explicitly. Choose where you quantize. Do not leave it to “whatever the compiler thinks”.

I will later remove the staging buffers and move to proper interop. Not because is staging is wrong, but because staging is the wrong tradeoff once your logic is correct. Vulkan is explicit enough that the right time to optimize is when you no longer need the bytes for truth verification. I find that time is later than people think.

Well anyways, I’ve already bored you with too much nonsense. The point of the matter is, SPIR-V expresses what you tell it to express. GLSL gives you the fiction of a language (but not the fiction of mercy!). The GPU essentially did what I asked, and forced me to mean exactly what I said.

Next part coming up as soon as I finish RMSNorm and Positional Encoding. Hopefully very soon, before I get nerd-sniped by something else. I’ll leave at this here.

You can track my progress here: aphrodite-engine/aphrodite-engine#1416